We study the problem of embodied depth prediction, where an embodied agent in an environment must learn to accurately estimate the depth of its surroundings. Such a task is useful for embodied AI, where it is highly desirable to accurately predict 3D structure when deploying robots in novel environments. However, directly using existing pre-trained depth prediction models in this setting is difficult, as images are often captured from out-of-distribution viewpoints. Instead, it is important to construct a system that can adapt and learn depth prediction by interacting with and gathering information from the environment, and that can utilize the rich information in past observations captured through ego-motion. To address this problem, we propose a framework for actively interacting with the environment to learn depth prediction, leveraging both exploration of new areas and exploration of areas where depth predictions are inconsistent. To exploit the rich information captured in past observations in the embodied setting, we further jointly utilize current and past image observations and their corresponding ego-motion to predict depth. We illustrate the efficacy of our approach in obtaining accurate depth predictions in both simulated and real household environments.

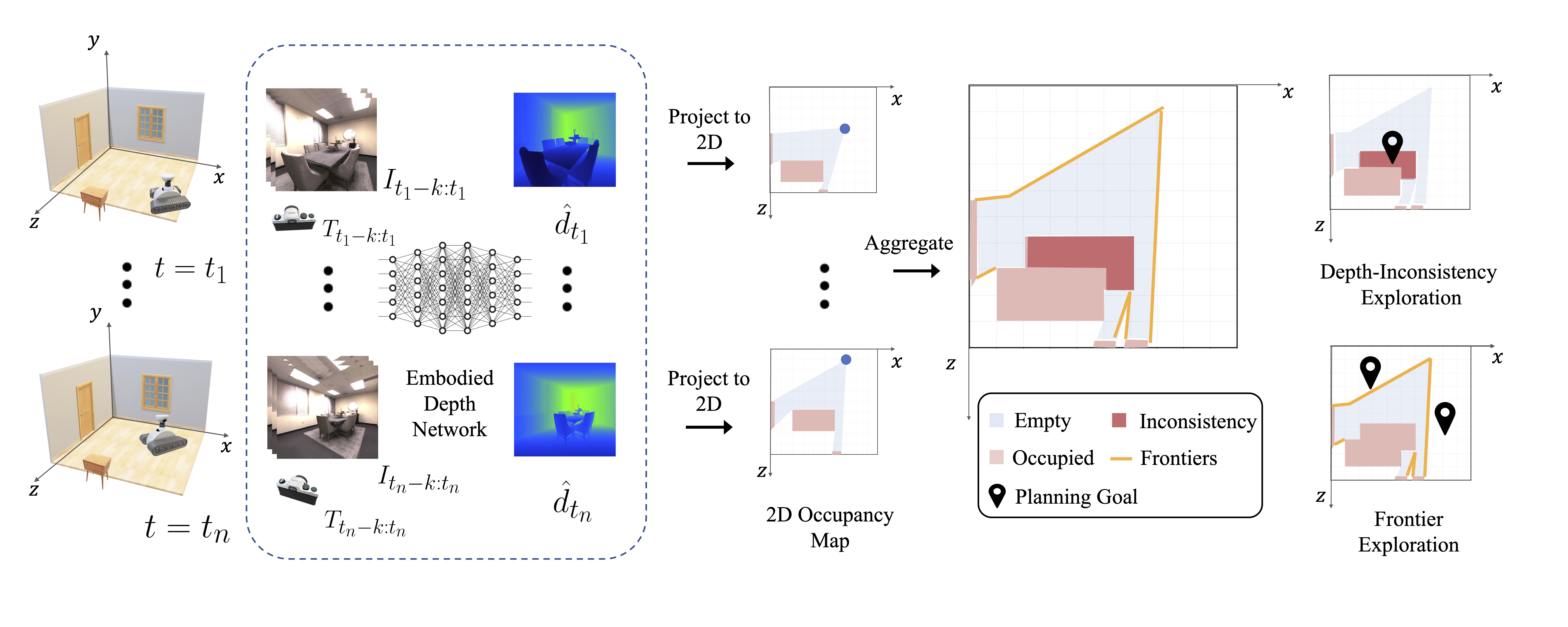

Our approach to embodied depth prediction gathers and maintains a buffer of RGB images from the environment. A set of contiguous frames, consisting of images and their associated ego-motion, is sampled from the replay buffer and used to generate a depth prediction with the Embodied Depth Network, which is trained using a photometric loss. More images are gathered by an active policy that combines frontier exploration with the inconsistency between depths predicted at nearby RGB observations.
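The buffer sampling and the loss described above can be pictured with a minimal sketch. It is not the actual implementation: the `ReplayBuffer` class, the `sample_window` method, and the `photometric_l1` function are hypothetical names, ego-motion is assumed to be stored as one vector per frame, and the warping step that reconstructs a target image from a neighboring frame using predicted depth and ego-motion is omitted. Photometric losses in this line of work often add a structural-similarity term as well; only a plain L1 term is shown here.

```python
import numpy as np


class ReplayBuffer:
    """Stores (image, ego_motion) pairs in the order they were observed.

    Hypothetical helper for illustration only.
    """

    def __init__(self):
        self.images = []
        self.ego_motions = []

    def add(self, image, ego_motion):
        self.images.append(np.asarray(image, dtype=float))
        self.ego_motions.append(np.asarray(ego_motion, dtype=float))

    def sample_window(self, length, rng):
        """Return `length` consecutive frames and their ego-motions."""
        if length > len(self.images):
            raise ValueError("not enough frames in the buffer")
        # Upper bound is exclusive, so the window always fits in the buffer.
        start = int(rng.integers(0, len(self.images) - length + 1))
        stop = start + length
        return (np.stack(self.images[start:stop]),
                np.stack(self.ego_motions[start:stop]))


def photometric_l1(target, reconstructed):
    """Mean absolute intensity difference between two images.

    `reconstructed` is assumed to be a neighboring frame already warped
    into the target view; the warping itself is not shown.
    """
    target = np.asarray(target, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    return float(np.mean(np.abs(target - reconstructed)))
```

Sampling consecutive frames, rather than independent ones, is what lets a multi-frame network pair each image with the ego-motion that links it to its neighbors.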

Here we show how our active policy guides the data collection in simulation. Even if the network outputs an ambiguous prediction, our strategy can still guide the collection.
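One way to picture how frontier exploration and depth inconsistency might be combined when choosing where to go next is sketched below. How the two signals are actually combined is not stated here, so the weighted sum, the `weight` parameter, and the function names `depth_inconsistency` and `select_goal` are all assumptions made for illustration. The two depth maps are also assumed to be already aligned to a common view.

```python
import numpy as np


def depth_inconsistency(depth_a, depth_b, eps=1e-6):
    """Mean relative disagreement between two aligned depth maps.

    Assumes both maps are expressed in the same view; the alignment
    using ego-motion is not shown.
    """
    depth_a = np.asarray(depth_a, dtype=float)
    depth_b = np.asarray(depth_b, dtype=float)
    mean_depth = 0.5 * (depth_a + depth_b) + eps
    return float(np.mean(np.abs(depth_a - depth_b) / mean_depth))


def select_goal(frontier_scores, inconsistency_scores, weight=0.5):
    """Pick the candidate location with the highest combined score.

    The weighted sum is an illustrative assumption: `weight` = 0 gives
    pure frontier exploration, `weight` = 1 gives pure revisiting of
    regions where predicted depths disagree.
    """
    frontier = np.asarray(frontier_scores, dtype=float)
    inconsistency = np.asarray(inconsistency_scores, dtype=float)
    if frontier.shape != inconsistency.shape:
        raise ValueError("score arrays must have the same shape")
    combined = (1.0 - weight) * frontier + weight * inconsistency
    return int(np.argmax(combined)), combined
```

Under this sketch, a candidate with no frontier value can still be selected when its depth predictions disagree strongly, while candidates with uninformative predictions fall back to the frontier term.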

Below, we visualize depth predictions in simulation. Our approach produces more accurate and more consistent depth maps than the conventional random exploration strategy with Monodepth2. This can be attributed to the enhanced exploration capabilities of our active policy and to the use of multi-frame inputs, which overcome limitations associated with single-frame inputs.


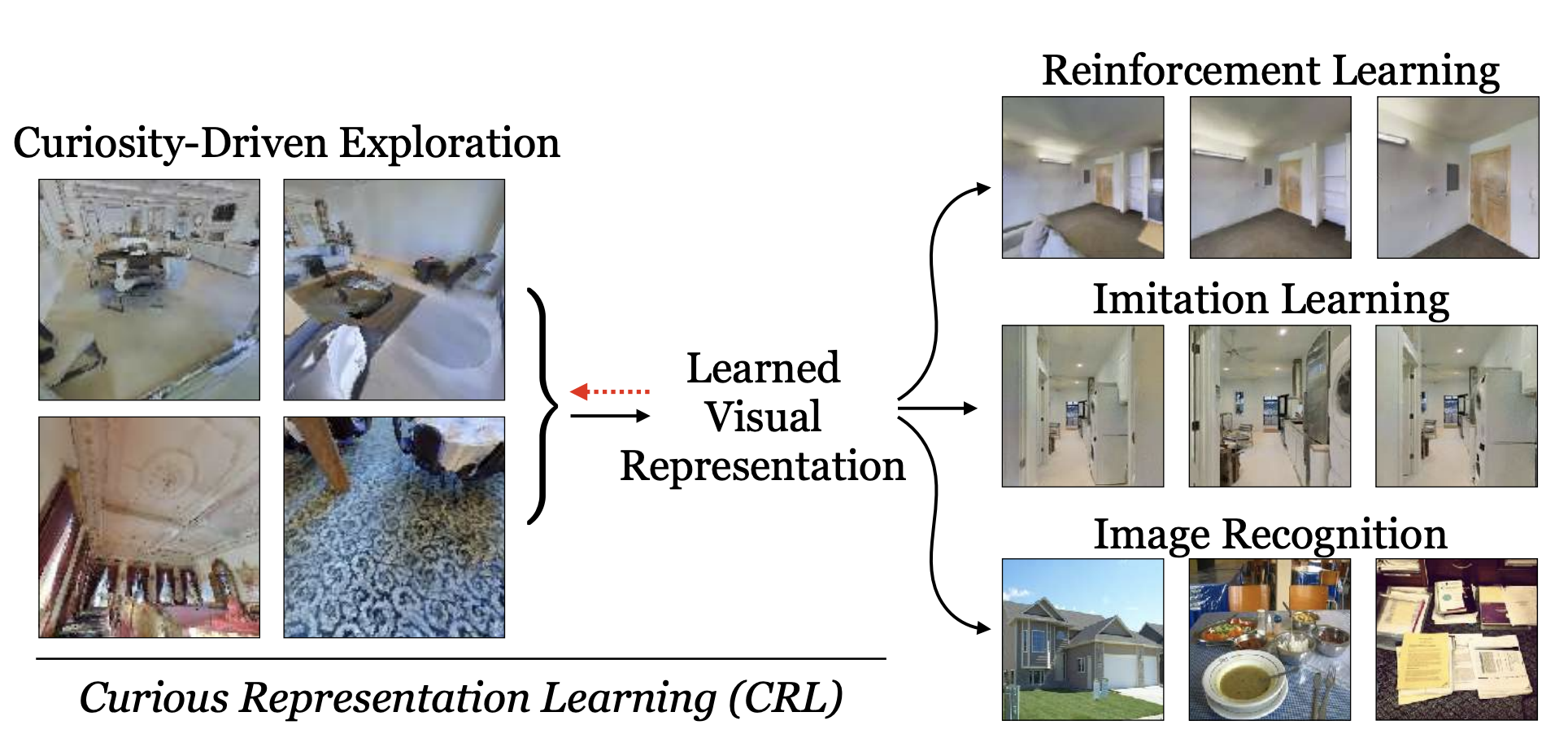

We introduce CRL, an approach to embodied representation learning in which a representation learning model plays a minimax game with an exploration policy. The exploration policy learns to explore the surrounding environment so as to maximize a contrastive loss. The representation learning model is then trained on the diverse images gathered.